Understanding AI Agents: The Intersection of Autonomy, Predetermined Logic, and Statefulness

Artificial Intelligence (AI) has evolved rapidly over the past few decades, transitioning from simple rule-based systems to complex models capable of learning, reasoning, and decision-making. Among the most intriguing developments in AI are AI Agents: systems designed to perceive their environment, make decisions, and act autonomously to achieve specific goals. In this blog post, we’ll explore the core attributes of AI Agents and how they differ from traditional programs that use AI technologies such as Large Language Models (LLMs). We’ll conclude by defining AI Agents through three critical attributes: autonomous logic, predetermined logic, and statefulness.

Introduction to AI Agents

An AI Agent is a system that perceives its environment through various inputs and acts autonomously to achieve specific goals. Unlike traditional programs, which simply execute a fixed set of predefined instructions, AI Agents are designed to make independent decisions and adapt to changing conditions.

Key Characteristics of AI Agents:

- Autonomy: Ability to operate without human intervention.

- Adaptability: Capability to learn from experiences and adjust behaviors.

- Goal-Oriented Behavior: Designed to achieve specific objectives.

- Interactivity: Interacts with the environment and possibly other agents.

AI Agents are prevalent in various domains, including robotics, virtual assistants, autonomous vehicles, and more. They are instrumental in tasks that require decision-making in dynamic environments.

The Evolution of AI Systems

To appreciate the significance of AI Agents, it’s essential to understand the evolution of AI systems:

- Rule-Based Systems:

  - Early AI systems were deterministic, following explicit rules defined by programmers.

  - They adapted poorly and struggled to handle uncertainty.

- Machine Learning Models:

  - Introduction of algorithms that learn patterns from data.

  - Improved performance in tasks like image recognition and natural language processing.

- Deep Learning and Neural Networks:

  - Complex architectures capable of modeling intricate patterns.

  - Enabled breakthroughs in areas like speech recognition and autonomous driving.

- Reinforcement Learning Agents:

  - Systems that learn optimal behaviors through interactions with the environment.

  - Marked the emergence of AI Agents capable of complex decision-making.

Defining AI Agents

An AI Agent is more than just a program that utilizes AI algorithms; it embodies characteristics that enable it to function independently and intelligently within its environment.

AI Agent Definition:

An AI Agent is a software (or hardware) entity that autonomously perceives its environment, processes inputs, maintains state, and takes actions to achieve specific goals, often adapting its behavior based on experiences.

Differentiating AI Agents from AI-Powered Programs:

- AI Agents: Autonomous entities with decision-making capabilities and state management.

- AI-Powered Programs: Software that uses AI models (e.g., LLMs) to perform specific tasks but lacks autonomy and statefulness.
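The following minimal Python sketch makes the distinction concrete. The `model` argument is a hypothetical stand-in for any LLM call (a function from prompt text to reply text); no real LLM API is assumed.

- `summarize` plays the role of an AI-powered program: it makes one call and keeps no memory.
- `Agent.step` keeps a history and asks the model to choose the next action toward a goal.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM call: any function from prompt text to reply text.
Model = Callable[[str], str]


def summarize(model: Model, text: str) -> str:
    """AI-powered program: a single model call with no memory and no choice of next step."""
    return model(f"Summarize: {text}")


@dataclass
class Agent:
    """Minimal agent: observes, keeps state, and lets the model choose the next action."""
    model: Model
    goal: str
    history: List[str] = field(default_factory=list)

    def step(self, observation: str) -> str:
        self.history.append(f"observed: {observation}")  # statefulness: remember the input
        prompt = f"Goal: {self.goal}\nHistory: {self.history}\nNext action?"
        action = self.model(prompt)  # autonomy: the model, not the code, picks the action
        self.history.append(f"did: {action}")  # remember the chosen action as well
        return action


if __name__ == "__main__":
    # Fake model for illustration only: its reply depends on how much history it sees.
    def fake(prompt: str) -> str:
        return f"action-{prompt.count('observed:')}"

    print(summarize(fake, "report"), summarize(fake, "report"))  # action-0 action-0
    agent = Agent(model=fake, goal="tidy the inbox")
    print(agent.step("3 new emails"), agent.step("1 new email"))  # action-1 action-2
```

`Agent` folds its own history into every prompt, so the same observation can lead to a different action later in a session. By contrast, `summarize` behaves identically on every call, apart from any randomness inside the model itself.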

Attribute 1: Autonomous Logic

Autonomous Logic refers to the ability of a system to make decisions and take actions without requiring explicit instructions at every step. Rather than following a rigid script, the system evaluates the environment and its goals, then determines how best to proceed. This quality underpins the adaptability and resilience seen in true AI Agents.

LLM Non-Determinism and Multiple Solutions:

When AI Agents rely on Large Language Models (LLMs) or other generative components, the concept of autonomy often intersects with non-determinism. Traditional software systems produce the same output each time they are given the same input, adhering strictly to predefined logic. In contrast, LLM-based reasoning can yield different outcomes even under seemingly identical circumstances. This variation can arise from subtle changes in context, differences in the prompt, or the inherent probabilistic nature of the model’s sampling process.
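The sampling effect can be illustrated without a real model. The sketch below assumes the decision component assigns a score (a logit) to each candidate action. It then samples with a temperature-scaled softmax, a common decoding strategy for LLMs. The action names and scores are made up for illustration, and real LLMs sample over tokens rather than whole actions.

```python
import math
import random
from typing import Dict


def sample_action(scores: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Choose an action from raw scores (logits) by temperature-scaled softmax sampling."""
    if temperature <= 0:
        # Greedy decoding: always the top-scoring action, so the result is repeatable.
        return max(scores, key=scores.get)
    scaled = [s / temperature for s in scores.values()]
    peak = max(scaled)  # subtracting the max keeps exp() numerically stable
    weights = [math.exp(v - peak) for v in scaled]
    # random.choices normalizes the weights, so they need not sum to 1.
    return rng.choices(list(scores), weights=weights, k=1)[0]


if __name__ == "__main__":
    scores = {"brake": 2.0, "swerve_left": 1.5, "swerve_right": 1.2}  # illustrative values
    rng = random.Random()  # unseeded: results vary from run to run
    print([sample_action(scores, 1.0, rng) for _ in range(5)])
    print(sample_action(scores, 0.0, rng))  # greedy: always "brake"
```

Setting the temperature to 0 collapses sampling to a greedy choice. Higher temperatures spread probability across the alternatives. Two generators seeded identically reproduce the same sequence, which is why fixing the seed is a common aid when debugging non-deterministic behavior.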

For example, consider an autonomous vehicle approaching an unexpected obstacle on the road. Faced with a hazard, it must choose how to respond—perhaps braking swiftly, swerving left, or maneuvering to the right. Each action involves interpreting sensory data, weighing risks, and generating potential strategies. An LLM-based reasoning component might propose multiple plausible solutions:

- Braking: Safest if there’s ample space behind and minimal risk of a rear-end collision.

- Swerving Left: Potentially avoids the obstacle but might place the car closer to oncoming traffic.

- Swerving Right: Could bypass the hazard if the lane is clear, but introduces the complexity of moving off the intended path.

In all these scenarios, the LLM-backed logic doesn’t have a single, fixed answer. Instead, it could suggest a range of viable actions, each with its own trade-offs. Over multiple runs of the same scenario, randomness in the model’s sampling, or small differences in the context it receives, might lead it to favor different solutions. Similarly, a conversational AI tasked with summarizing a complex document may produce varying summaries of the same text (some focusing on key points, others offering more narrative detail) depending on subtle differences in input phrasing or the random “seed” guiding its generation process.

This non-determinism doesn’t represent a flaw but rather highlights a strength of autonomous logic. It allows AI Agents to approximate the flexibility and creativity of human decision-making. The agent can adapt its approach, test different strategies, and refine its behavior based on trial, error, and feedback. However, this characteristic also introduces new challenges in predictability and verification—ensuring the AI Agent consistently makes safe and effective choices may require constraints, monitoring, and careful alignment of model outputs with desired outcomes.
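One simple form of monitoring is to replay the same scenario many times and measure how often each action is chosen. The sketch below assumes a hypothetical `proposer` function that wraps the non-deterministic component and returns an action label. The action weights in the usage example and the idea of a fixed "risk budget" are illustrative assumptions, not an established standard.

```python
import random
from collections import Counter
from typing import Callable, Dict, Set

# Hypothetical wrapper around the non-deterministic component: takes an RNG, returns an action.
Proposer = Callable[[random.Random], str]


def audit(proposer: Proposer, runs: int, seed: int = 0) -> Dict[str, float]:
    """Replay the same scenario `runs` times and return each action's observed frequency."""
    rng = random.Random(seed)  # seeded so an audit can be reproduced exactly
    counts = Counter(proposer(rng) for _ in range(runs))
    return {action: n / runs for action, n in counts.items()}


def within_risk_budget(freqs: Dict[str, float], risky: Set[str], max_rate: float) -> bool:
    """Pass only if the combined frequency of risky actions stays at or below max_rate."""
    return sum(freqs.get(a, 0.0) for a in risky) <= max_rate


if __name__ == "__main__":
    # Illustrative proposer: mostly brakes, sometimes swerves.
    def proposer(rng: random.Random) -> str:
        return rng.choices(["brake", "swerve_left", "swerve_right"], weights=[0.7, 0.1, 0.2])[0]

    freqs = audit(proposer, runs=10_000)
    print(freqs)
    print(within_risk_budget(freqs, {"swerve_left"}, max_rate=0.2))
```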

By emphasizing that LLM-driven autonomous logic can be non-deterministic and open to multiple solutions, we underscore the complexity and adaptability that set AI Agents apart from traditional, rule-bound programs.

Attribute 2: Predetermined Logic

What is Predetermined Logic?

Predetermined Logic involves following a set of predefined rules or instructions specified by the programmer. The system executes tasks in a fixed manner, responding predictably to specific inputs.
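A minimal sketch of predetermined logic in Python follows: an ordered rule table, written in advance, checked against sensor flags. The condition and action names are hypothetical. Given the same input, `decide` always returns the same action, and the order of the rules encodes their priority.

```python
from typing import Dict, Tuple

# Hypothetical rule table written by a programmer: (condition, action) pairs checked in order.
RULES: Tuple[Tuple[str, str], ...] = (
    ("obstacle_ahead", "brake"),
    ("red_light", "stop"),
    ("green_light", "proceed"),
)


def decide(sensors: Dict[str, bool]) -> str:
    """Return the action of the first rule whose condition is true; same input, same output."""
    for condition, action in RULES:
        if sensors.get(condition, False):
            return action
    return "maintain_speed"  # default when no rule fires


if __name__ == "__main__":
    print(decide({"obstacle_ahead": True, "green_light": True}))  # brake (first matching rule wins)
```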

Role in AI Systems

- Consistency: Ensures the system behaves in expected ways.

- Simplicity: Easier to design and implement for straightforward tasks.

- Control: Offers precise control over system behavior.

Predetermined Logic in AI Agents

While AI Agents are autonomous, they often incorporate predetermined logic as a foundation:

- Framework: Predetermined rules define the agent’s operational boundaries.

- Guidelines: Provide a structure within which the agent can make autonomous decisions.

- Safety Measures: Predetermined logic can enforce constraints to prevent undesirable actions.

Balancing Autonomy and Predetermined Logic

An effective AI Agent balances autonomous logic with predetermined logic:

- Autonomous Logic: Provides flexibility and adaptability.

- Predetermined Logic: Ensures reliability and adherence to critical rules.
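One common way to strike this balance is a guardrail: the autonomous component proposes actions, and fixed rules decide which proposals may run. This sketch reuses the braking and swerving scenario from earlier.

- `proposals` stands in for the ranked output of an LLM or other planner (not shown).
- `blocked` stands in for actions that a separate rule, such as "never swerve into an occupied lane," has ruled out.
- The action set and the fallback are hypothetical.

```python
from typing import Iterable, Set

ALLOWED_ACTIONS: Set[str] = {"brake", "swerve_left", "swerve_right"}  # hypothetical action space
FALLBACK = "brake"  # hypothetical safe default chosen by the designers


def guarded_action(proposals: Iterable[str], blocked: Set[str]) -> str:
    """Return the first autonomous proposal that passes the predetermined checks,
    or the safe fallback if none does."""
    for action in proposals:
        if action in ALLOWED_ACTIONS and action not in blocked:
            return action
    return FALLBACK


if __name__ == "__main__":
    # The planner prefers swerving left, but a rule reports the left lane is occupied.
    print(guarded_action(["swerve_left", "swerve_right"], blocked={"swerve_left"}))  # swerve_right
```

The fallback is trusted unconditionally, so it should be the action the designers are most confident is safe in every state.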

Attribute 3: Statefulness

What is Statefulness?

Statefulness refers to the ability of a system to retain information about past interactions or events. A stateful system’s behavior can change based on its history and accumulated data.

Importance in AI Agents

- Context Awareness: Allows agents to consider previous actions and outcomes.

- Learning: Facilitates learning from experiences to improve future performance.

- Personalization: Enables agents to tailor interactions based on user preferences.
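Learning from experience ultimately comes down to updating stored state. This minimal sketch assumes each action receives a numeric reward, for example 1.0 if a user engaged with a suggestion and 0.0 otherwise. It keeps a running average per action using the standard incremental-mean update. The class and action names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict


class ActionValueMemory:
    """Running average reward per action; the agent's state changes as it gains experience."""

    def __init__(self) -> None:
        self.counts: DefaultDict[str, int] = defaultdict(int)
        self.values: DefaultDict[str, float] = defaultdict(float)

    def update(self, action: str, reward: float) -> None:
        # Incremental mean: new_avg = old_avg + (reward - old_avg) / n
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]

    def best(self) -> str:
        """Action with the highest average so far (ties go to the action recorded first)."""
        return max(self.values, key=self.values.get)


if __name__ == "__main__":
    memory = ActionValueMemory()
    for reward in (1.0, 0.0, 1.0):
        memory.update("suggest_article", reward)
    memory.update("suggest_video", 0.5)
    print(memory.best())  # suggest_article (average 0.67 vs 0.5)
```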

Statefulness in Practice

In conversational AI Agents:

- Dialogue Management: The agent remembers prior exchanges to maintain coherent conversations.

- User Profiles: Retains user data to provide personalized responses.

- Adaptation: Learns from interactions to refine its language and recommendations.
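Here is a minimal sketch of dialogue state in Python, with hypothetical names. Recent turns live in a bounded window, and older turns are dropped so the prompt does not grow without limit. Profile facts such as user preferences persist for the whole session. `build_context` shows what might be sent to a model on the next turn; the exact prompt format is an assumption.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Tuple


@dataclass
class ConversationState:
    """Hypothetical conversational state: a bounded window of recent turns plus a user profile."""
    max_turns: int = 6
    profile: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # A deque with maxlen silently discards the oldest turn once the window is full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=self.max_turns)

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def remember(self, key: str, value: str) -> None:
        self.profile[key] = value  # persists across turns, unlike the sliding window

    def build_context(self) -> str:
        """Assemble the profile and recent turns into one prompt string."""
        prefs = "; ".join(f"{k}={v}" for k, v in sorted(self.profile.items()))
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        return f"[profile] {prefs}\n{history}"


if __name__ == "__main__":
    state = ConversationState(max_turns=2)
    state.remember("units", "metric")
    state.add_turn("user", "Hi")
    state.add_turn("agent", "Hello!")
    state.add_turn("user", "What's the weather in Oslo?")
    print(state.build_context())
```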

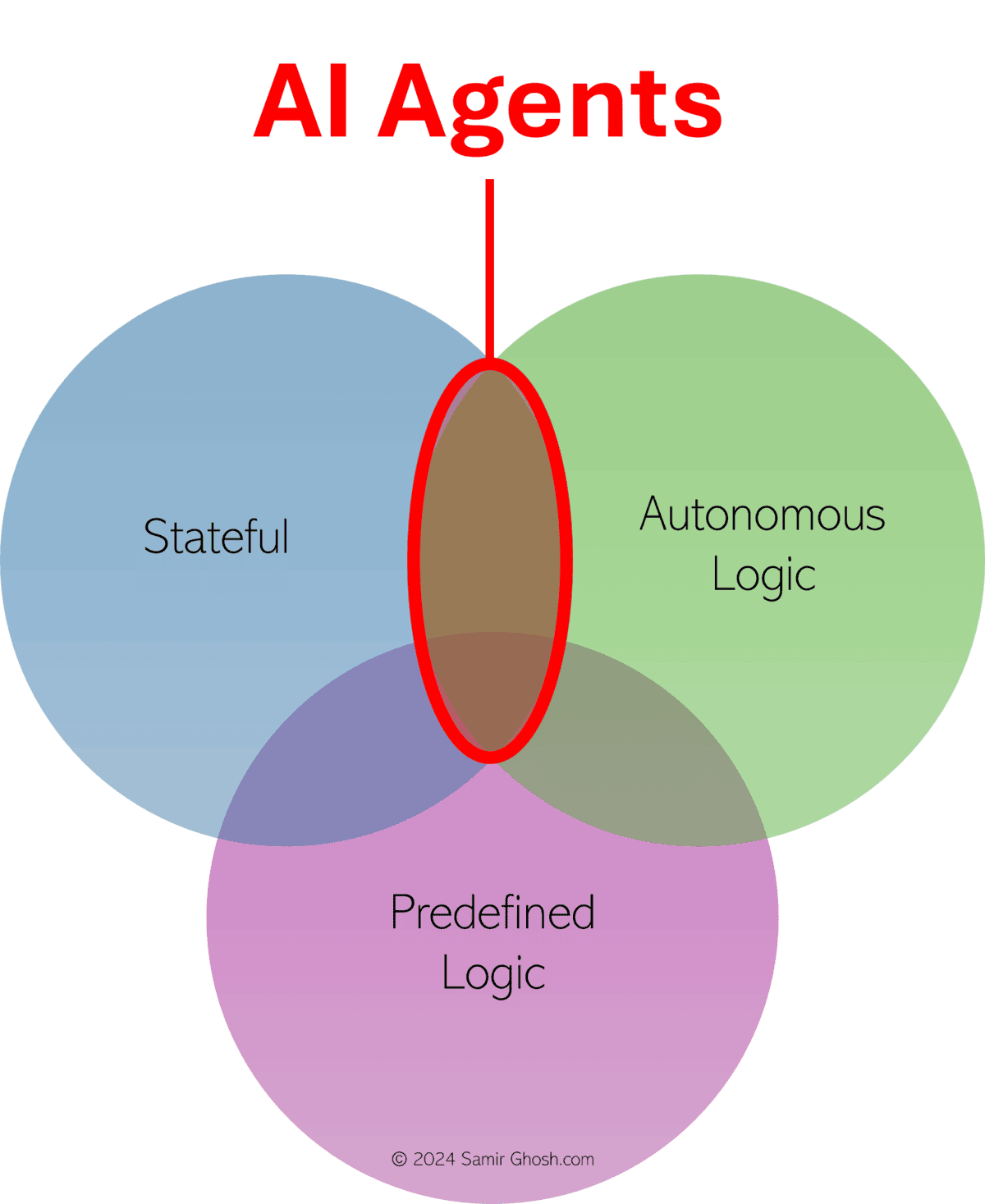

The Venn Diagram of AI Agent Attributes

To conceptualize how these three attributes define AI Agents, imagine a Venn diagram with three circles representing Autonomous Logic, Predetermined Logic, and Statefulness. The intersection of these circles represents systems that embody all three attributes—true AI Agents.

Diagram Description

- Circle 1: Autonomous Logic

  - Systems that can make independent decisions.

  - Examples: Reinforcement learning models, autonomous robots.

- Circle 2: Predetermined Logic

  - Systems that follow set rules or algorithms.

  - Examples: Rule-based systems, traditional software programs.

- Circle 3: Statefulness

  - Systems that retain and utilize past information.

  - Examples: Stateful web applications, database systems.

Intersection Areas

- Autonomous Logic + Statefulness (Without Predetermined Logic):

  - Agents that learn and decide independently but lack predefined rules.

  - Risk of unpredictable behavior without constraints.

- Autonomous Logic + Predetermined Logic (Without Statefulness):

  - Systems that make decisions within set rules but don’t retain past information.

  - Limited in adapting based on history.

- Predetermined Logic + Statefulness (Without Autonomous Logic):

  - Systems that follow set rules and remember past states but don’t make independent decisions.

  - Examples: Stateful applications without AI capabilities.

Center Intersection: AI Agents

- Incorporate Autonomous Logic, Predetermined Logic, and Statefulness.

- Characteristics:

  - Decision-Making: Autonomously decide actions.

  - Guided by Rules: Operate within predefined constraints.

  - Contextual Awareness: Utilize past information for better outcomes.

Visual Representation

Imagine the Venn diagram where:

- The center represents AI Agents.

- The peripheral areas represent systems lacking one or more attributes.

- AI Agents are uniquely defined by the combination of all three attributes.
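The diagram can also be read as a lookup over three yes/no questions. This sketch encodes the regions described above as a table keyed by (autonomous, predetermined, stateful). The labels simply restate this section's categories, and the type names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class SystemTraits:
    autonomous: bool     # makes its own decisions (e.g., via an LLM or learned policy)
    predetermined: bool  # operates under explicit, programmer-defined rules
    stateful: bool       # retains and uses information from past interactions


# Keys are (autonomous, predetermined, stateful).
REGIONS: Dict[Tuple[bool, bool, bool], str] = {
    (True, True, True): "AI Agent",
    (True, False, True): "Autonomous Logic + Statefulness",
    (True, True, False): "Autonomous Logic + Predetermined Logic",
    (False, True, True): "Predetermined Logic + Statefulness",
    (True, False, False): "Autonomous Logic only",
    (False, True, False): "Predetermined Logic only",
    (False, False, True): "Statefulness only",
    (False, False, False): "outside all three circles",
}


def venn_region(traits: SystemTraits) -> str:
    """Map a system's three traits to its region of the diagram."""
    return REGIONS[(traits.autonomous, traits.predetermined, traits.stateful)]


if __name__ == "__main__":
    print(venn_region(SystemTraits(autonomous=True, predetermined=True, stateful=True)))  # AI Agent
```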

Examples of AI Agents in Action

1. Virtual Personal Assistants (e.g., Siri, Alexa)

- Autonomous Logic: Understand and execute user commands independently.

- Predetermined Logic: Operate within the capabilities defined by developers.

- Statefulness: Remember user preferences and past interactions.

2. Autonomous Drones

- Autonomous Logic: Navigate and make real-time decisions.

- Predetermined Logic: Follow flight rules and safety protocols.

- Statefulness: Track battery levels and mission progress.

3. Recommendation Systems (Advanced)

- Autonomous Logic: Generate personalized recommendations.

- Predetermined Logic: Use algorithms to filter and rank content.

- Statefulness: Learn from user behavior over time.

4. Conversational Agents with Memory

- Autonomous Logic: Engage in dialogue and determine the next response.

- Predetermined Logic: Adhere to conversational rules and ethical guidelines.

- Statefulness: Maintain context across multiple exchanges.
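The drone example can be sketched end to end to show how the three attributes interact in a single control step. Everything here is hypothetical:

- `propose` stands in for an autonomous planner by picking randomly among plausible moves.
- The 25% return-home threshold is an illustrative safety rule.
- The fixed battery drain per step is a simplification.

```python
import random
from dataclasses import dataclass, field
from typing import List

RETURN_HOME_BATTERY = 25.0  # hypothetical safety threshold (predetermined rule)


@dataclass
class DroneState:
    """Statefulness: what the drone remembers between control steps."""
    battery_pct: float
    waypoints_done: int = 0
    log: List[str] = field(default_factory=list)


def propose(rng: random.Random) -> str:
    """Stand-in for the autonomous planner: picks among plausible next moves."""
    return rng.choice(["next_waypoint", "reroute_around_wind", "hold_position"])


def step(state: DroneState, rng: random.Random, drain: float = 10.0) -> str:
    # Predetermined logic overrides autonomy when a hard safety rule fires.
    if state.battery_pct <= RETURN_HOME_BATTERY:
        action = "return_home"
    else:
        action = propose(rng)  # autonomous logic
    # Statefulness: update and record what happened for later decisions.
    state.battery_pct = max(0.0, state.battery_pct - drain)
    if action == "next_waypoint":
        state.waypoints_done += 1
    state.log.append(action)
    return action


if __name__ == "__main__":
    drone = DroneState(battery_pct=50.0)
    rng = random.Random(0)
    print([step(drone, rng) for _ in range(5)], drone.battery_pct)
```

Starting at 50% battery with a 10% drain per step, the planner chooses the first three steps. Every step after the battery reaches 20% is forced to `return_home`, regardless of what the planner would have proposed.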

Conclusion

AI Agents represent a significant leap in artificial intelligence, embodying systems that are not only capable of performing tasks but also of making decisions, learning from experiences, and adapting to new situations. By understanding the three critical attributes—autonomous logic, predetermined logic, and statefulness—we can better appreciate what defines an AI Agent and how it differs from traditional AI-powered programs.

Key Takeaways:

- Autonomous Logic empowers AI Agents to make independent decisions.

- Predetermined Logic provides a necessary framework and safety net.

- Statefulness allows agents to retain information, enhancing adaptability and personalization.

An AI Agent is thus a system at the intersection of these attributes, capable of functioning intelligently and autonomously within its environment while adhering to essential guidelines and learning from its experiences.


Thank you for reading! If you have any questions or comments, feel free to share them below.
