Introduction: The Risks of Cannibalism

In the 1980s and 90s, British farmers unwittingly turned cattle into cannibals by adding processed cow remains to cattle feed. The outcome was catastrophic: cows developed mad cow disease, a degenerative brain condition officially known as Bovine Spongiform Encephalopathy (BSE). Scientists discovered that BSE spread because cows were eating feed made from the infected tissue of their own species (Bovine spongiform encephalopathy – Wikipedia). This gruesome feedback loop of cows consuming cows led to deadly prions (misfolded proteins) destroying their brains. The lesson was clear – when a species consumes its own kind, unexpected and fatal consequences can arise.

History offers other stark examples of cannibalism backfiring in tragic ways:

- Kuru in Papua New Guinea: Among the Fore people, ritual funerary cannibalism (eating deceased relatives) led to an epidemic of Kuru, a fatal neurodegenerative illness. Women and children who ate the brains of deceased family members contracted this prion disease, suffering tremors, loss of coordination, and eventually death (Kuru (disease) – Wikipedia). Kuru was essentially a human analog of mad cow disease – a brain-eating disease caused by literal brain-eating.

- Survival Cannibalism: In infamous survival scenarios like the Donner Party (1846) or the 1972 Andes plane crash, starving people resorted to eating the dead. While this desperate act provided calories, it came with immense psychological trauma and risk of disease. Consuming poorly cooked human flesh can spread any pathogens the victim carried, and survivors often suffered lifelong mental anguish.

- Other Outbreaks: The pattern is not limited to humans. Similar prion diseases have struck animals that ingested remains of their own species. The cannibalistic feeding practices that caused BSE in cattle also threatened humans who ate infected beef, leading to variant Creutzfeldt-Jakob disease (Bovine spongiform encephalopathy – Wikipedia). In each case, cannibalism created a deadly loop of contamination.

Cannibalism, whether born of ritual, necessity, or accident, tends to produce unintended harm. Consuming one’s own kind breaks a fundamental biological safeguard, often resulting in neurological decay or disease in the consumer. This concept of self-consumption leading to self-destruction extends beyond biology. As we’ll see, a similar pattern is emerging in the digital realm with artificial intelligence – an eerie form of digital cannibalism.

The Rise of AI-Generated Content: A Feeding Loop

AI has been proliferating content at an explosive rate, to the point that the internet is increasingly saturated with AI-written text, code, images, and more. Consider a few eye-opening statistics and trends:

- Web Content Explosion: According to a Copyleaks analysis, the amount of AI-generated content on the web surged by 8,362% from the debut of ChatGPT in November 2022 to March 2024 (Copyleaks Analysis Reveals Explosive Growth of AI Content Across the Web – Copyleaks). By early 2024, about 1.57% of all web pages contained AI-generated material (Copyleaks Analysis Reveals Explosive Growth of AI Content Across the Web – Copyleaks) – a small but rapidly growing slice of the internet. Some experts even warn that up to 90% of online content could be synthetically generated by 2026 if current trends continue (Experts: 90% of Online Content Will Be AI-Generated by 2026). This means the data that new AI systems train on will increasingly include AI-produced text and media. It’s a potential feedback loop, where AI is both the producer and consumer of content – akin to cattle eating feed made from other cattle.

- Code, News, and Research Going AI: The boom spans many domains. In software development, AI coding assistants now write a large share of code. GitHub’s CEO noted that nearly 46% of code in files where Copilot was in use was authored by the AI itself (and over 60% in some languages) (GitHub Copilot now has a better AI model and new capabilities – The GitHub Blog). Journalism has also seen automated content creation: news outlets experiment with AI-written articles, and a review of 77 AI-generated stories published by CNET found errors in more than half of them (CNET Found Errors in More Than Half Of Its AI-Written Stories). Academia isn’t immune either – one analysis estimates at least 1% of scientific papers in 2023 were partially written by AI, with that number possibly far higher in fields like computer science (Is ChatGPT Writing Scientific Research Papers? | Formaspace). From social media bots spouting auto-generated posts to entire blogs written by ChatGPT, AI content is everywhere.

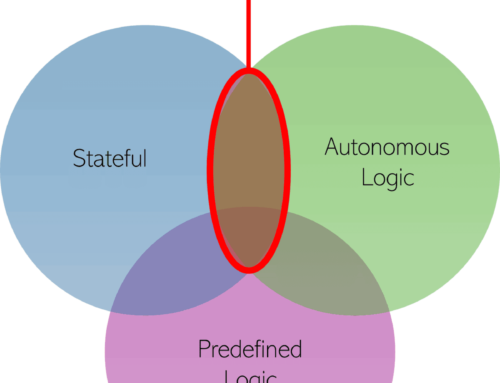

This rise in AI-generated content is impressive, but it also sets the stage for AI models training on data that is increasingly produced by other AIs. In other words, AI is starting to feed on AI. If a large portion of the internet (which is the training ground for most models) becomes AI-written text or AI-created images, then future AI models will inevitably ingest this synthetic diet. It’s a digital ouroboros – the mythical snake eating its tail – except here the snake might end up poisoning itself. This self-referential feeding loop is why observers have begun calling it “model cannibalism” or “AI cannibalism,” and it could put our AI systems at risk of a serious degenerative condition.

The Main Problem: AI Training on AI Data

When AI models train on data generated by other AIs, they enter a self-referential spiral that can degrade their quality over time. This phenomenon is now formally recognized by researchers, who dub it “model collapse” – essentially the AI equivalent of a prion disease. Just as a cannibalistic diet caused cows and people to develop brain-destroying defects, an AI that repeatedly consumes AI-made data can develop serious digital defects. Here’s how this degeneration happens:

- Reinforcement of Errors: AI outputs often contain mistakes – from subtle factual inaccuracies to outright gibberish. If these erroneous outputs are fed back into the training set of a new model, the model learns those errors as if they were truth. With each generation, the mistakes compound and get reinforced, much like how a copy of a copy of a copy loses fidelity. Researchers have found that generative models trained on their predecessors’ outputs produce increasingly inaccurate results and accumulate “irreversible defects,” eventually becoming practically useless (What Is Model Collapse? | IBM). Essentially, the AI is regurgitating and amplifying its own flaws, akin to a sickness that gets worse with each transmission. One study described it as models “poisoning themselves” on low-quality synthetic data and predicted they could “collapse” as a result (The AI Industry Is in a Raging Debate Over ‘Fake’ Data – Business Insider).

- Loss of Knowledge and Creativity: A healthy training dataset has diverse, rich information (including rare “long-tail” knowledge and variety in style/ideas). But AI-generated data tends to be more homogenized – many AI-written texts sound similar and lack the nuance of human writing. Over successive rounds of training on such data, models start to lose the uncommon but important information from the tails of the distribution. In one experiment, researchers saw that models first forgot the rarer bits of knowledge (early-stage collapse) and later even the average data distribution started to distort, until the model’s output no longer resembled the original reality at all (What Is Model Collapse? | IBM). This is like a cultural or intellectual cannibalism: the AI “forgets” diversity and becomes a narrow echo chamber of itself. The result is a homogenization of knowledge – the model’s answers gravitate toward the same bland median, and it loses creativity and the ability to surprise. Imagine an art AI that only trains on AI-made images: eventually, it might only produce derivative blobs of what came before, with originality fading away (indeed, experiments with image models have shown later generations producing nearly identical, less detailed images) (What Is Model Collapse? | IBM).

- Model Collapse into Nonsense: If the AI-to-AI training cycle continues unchecked, the outcomes can be outright nonsensical. A group of scientists mathematically modeled this recursive training and observed a dramatic decline in coherence after only a few generations (Using AI to train AI: Model collapse could be coming for LLMs, say researchers). In a striking example, they fed a language model an initial prompt about medieval architecture and then repeatedly retrained new models on the previous model’s output. By the ninth iteration, the AI’s output had devolved into a bizarre list of “jackrabbits with different colored tails,” utterly unrelated to architecture (Using AI to train AI: Model collapse could be coming for LLMs, say researchers). The model had essentially collapsed into gibberish, its knowledge base hollowed out by self-consumption. This mirrors how Kuru patients or BSE-infected cows suffer neurological collapse – the AI’s “brain” (its model weights) accumulates distortions that lead to malfunction.

Researchers Ilia Shumailov and colleagues, who studied this effect, conclude that model collapse is an inevitable outcome if we keep letting AI models train on the unchecked output of other AIs (Using AI to train AI: Model collapse could be coming for LLMs, say researchers). Without intervention, errors and biases will keep snowballing. The AI becomes a snake eating its tail, each bite making it a bit dumber or more distorted – a digital echo chamber that eventually turns into babbling nonsense. In essence, self-cannibalism in AI leads to a form of digital madness: the model loses its grip on reality (facts), becomes less innovative, and can even break down into absurdity. This is a grave concern as we rely more on AI for accurate information and creative solutions. If we allow this feedback loop to continue, we might witness the gradual decay of AI capabilities – an AI “mad cow” crisis.
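To make the “copy of a copy” effect concrete, here is a minimal toy sketch. It is not the experiment from the cited research; it simply assumes the “model” is a bell curve (a Gaussian) that is refit, generation after generation, to a small sample drawn from the previous generation’s fit. The function name `simulate_collapse` and all parameter values are illustrative assumptions.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Toy model collapse: each generation is fit only to samples
    produced by the previous generation's fitted Gaussian."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the "real" data
    history = [sigma]
    for _ in range(generations):
        # The previous model generates the next model's training data.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # "Training" here is just re-estimating the mean and spread.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


history = simulate_collapse()
print(f"spread at generation 0:   {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3e}")
```

In this sketch the spread of the distribution tends to shrink toward zero: the rare values in the tails disappear first, and eventually every sample looks almost the same. That is a simplified picture of the loss of diversity described above, under the stated assumptions.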

What Can Be Done? Preventing the AI Cannibalism Crisis

The good news is that, unlike actual cows or isolated human tribes, we are aware of the danger and can take proactive steps to prevent AI from eating itself to death. It will require conscious effort from AI developers, content creators, and policymakers to ensure our models maintain a healthy “diet” of genuine, high-quality data. Here are some strategies to avert a digital cannibalism disaster:

- Stricter Dataset Curation and Provenance Tracking: The simplest solution is to watch what we feed our AIs. Training datasets should be carefully curated to favor human-generated, verified content and exclude as much AI-generated material as possible. This means developing tools to identify AI-produced text or images (through detectors or watermarks) and filtering them out of training data (Model collapse – Wikipedia). Researchers have proposed tracking the provenance of data – essentially labeling content with its origin (human or AI) (What Is Model Collapse? | IBM). With such provenance metadata, model trainers could deliberately limit synthetic content in the mix. Initiatives like the Data Provenance Initiative are already auditing datasets to help with this (What Is Model Collapse? | IBM). In short, treat AI outputs as lower-quality “junk food” for models, to be used sparingly if at all.

- Hybrid Training Methods (Mixing Real and Synthetic Data Wisely): If completely purging AI-generated data isn’t feasible (especially as it becomes ubiquitous), the next best approach is a balanced diet. Studies suggest that model collapse can be avoided if new human-generated data continues to be added alongside synthetic data in training sets (Model collapse – Wikipedia). This is akin to diluting the poison. Rather than training successive models only on their predecessor’s outputs, always include a substantial portion of fresh real-world data so that the model doesn’t lose touch with reality. Another approach is fine-tuning AI models on real data after an initial training cycle, to refresh their knowledge and correct course. Essentially, we must ensure that each new generation of AI has some grounding in truth by keeping one foot in the real world. The feedback loop can then be kept in check.

- Incentivize Human Content Creation: One reason AI-generated content is exploding is that it’s cheap and fast, whereas high-quality human content (writing, art, code) is time-consuming and expensive. We risk a future where authentic content becomes scarce. To counter this, society might need to reward and promote human creativity and original content. This could involve tech companies paying for the data they train on (for example, licensing news articles, books, or artwork rather than just scraping them). It might also mean creating platforms that highlight human-created works or certifying content as human-made to increase its perceived value. If content creators see that producing original work has value (and perhaps protection from being blindly scraped), they’ll continue to enrich the pool of real data. Some experts have called for a new content economy where creators receive a return on investment (ROI) when their data is used by AI, to keep the incentive to create alive. In summary, we need to make sure humans keep writing, drawing, and coding – it’s the intellectual fresh water that keeps the well from going stagnant.

- Regulatory and Technological Interventions: Governments and standards bodies are waking up to the issue of AI content flooding our information ecosystems. One idea is to mandate transparency – requiring AI-generated content to be clearly labeled or watermarked. Legislation is already in the works: for instance, the proposed AI Labeling Act of 2023 in the U.S. would require that AI-generated material be disclosed or labeled in certain contexts (U.S. Legislative Trends in AI-Generated Content: 2024 and Beyond). Similarly, the EU AI Act will enforce disclosure for AI-produced media, and some states have laws against undisclosed deepfakes. These measures won’t directly stop model collapse, but they make it easier to identify synthetic data and thus help with filtering it out of training sets. On the tech side, researchers are developing algorithmic solutions: improved AI detectors, embedding invisible watermarks in AI output, and even new training algorithms that can recognize and down-weight synthetic data during learning. Finally, companies should institute data governance policies – for example, not allowing their next GPT model to train on the raw outputs of the previous GPT without vetting. In essence, a combination of “nutrition labels” for AI content and rules for a healthy AI training regimen can prevent a slide into an endless misinformation cycle.
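The “balanced diet” idea from the hybrid training strategy above can be illustrated with a toy simulation. This is a sketch under strong assumptions, not a result from the cited studies: the “model” is a Gaussian refit each generation, the “real world” is a fixed standard normal distribution, and `final_spread` and `real_fraction` are hypothetical names chosen for this example.

```python
import random
import statistics


def final_spread(real_fraction, generations=500, sample_size=20, seed=0):
    """Refit a Gaussian each generation on a blend of fresh real data
    and samples from the previous generation's fit; return the final spread."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    n_real = round(real_fraction * sample_size)
    for _ in range(generations):
        # Fresh "human" data always comes from the true distribution.
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        # Synthetic data comes from the previous generation's model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_real)]
        batch = real + synthetic
        mu = statistics.fmean(batch)
        sigma = statistics.pstdev(batch)
    return sigma


pure_synthetic = final_spread(real_fraction=0.0)
half_real = final_spread(real_fraction=0.5)
print(f"final spread, 0% real data:  {pure_synthetic:.3e}")
print(f"final spread, 50% real data: {half_real:.3f}")
```

With no real data, the fitted spread in this toy tends to decay toward zero. With half of each batch drawn from the real distribution, the spread stays anchored near its original scale, because every generation is pulled back toward reality.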

By implementing these strategies, we can hopefully avoid the worst outcomes. Just as strict feed policies (banning animal remains in feed) effectively halted mad cow disease (Bovine spongiform encephalopathy – Wikipedia), a concerted effort to police AI’s training diet can stave off model collapse. It will require diligence – continuously monitoring the ratio of human vs. AI data – and perhaps new industry standards for dataset quality. The challenge is manageable if addressed early: we’re essentially putting in safeguards against AI cannibalism before it becomes an irreversible problem.
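As a rough sketch of what policing the ratio of human vs. AI data might look like in practice, the snippet below assumes every record already carries a trustworthy provenance label. The `Record` type, its `source` field, and `build_training_mix` are hypothetical names for illustration, not part of any real tool or standard.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    text: str
    source: str  # hypothetical provenance label: "human" or "ai"


def build_training_mix(records, max_synthetic_fraction=0.1, seed=0):
    """Keep all human-labeled records and only as many AI-labeled ones
    as the cap allows. Records with any other label are dropped."""
    if not 0.0 <= max_synthetic_fraction < 1.0:
        raise ValueError("max_synthetic_fraction must be in [0, 1)")
    human = [r for r in records if r.source == "human"]
    synthetic = [r for r in records if r.source == "ai"]
    # Solve s / (h + s) <= f for s, giving s <= f * h / (1 - f).
    budget = int(max_synthetic_fraction * len(human) / (1.0 - max_synthetic_fraction))
    rng = random.Random(seed)
    kept = rng.sample(synthetic, min(budget, len(synthetic)))
    return human + kept


records = [Record(f"human doc {i}", "human") for i in range(90)]
records += [Record(f"ai doc {i}", "ai") for i in range(50)]
mix = build_training_mix(records, max_synthetic_fraction=0.1)
share = sum(r.source == "ai" for r in mix) / len(mix)
print(f"{len(mix)} records kept, synthetic share = {share:.1%}")
```

The hard part in reality is the label itself: this sketch only works if detectors, watermarks, or provenance metadata can reliably say which records are synthetic.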

Conclusion: The Risks If We Do Nothing

The analogy of cannibalism is a warning from history. We saw how feeding cows to cows created a nightmare disease that literally punched holes in their brains, and how human cannibalistic practices led to deadly epidemics like Kuru. Now, we must ask a disquieting question: If unchecked, will AI models end up in a death spiral of self-replicating nonsense, just as mad cows and Kuru victims suffered neurological decay from consuming their own kind? The prospect is chilling. An AI that devours only its own synthetic output could progressively lose its grip on reality – a fate we could call digital dementia. Such models might become confident propagators of falsehoods, trapped in a loop of deteriorating knowledge. In a world that increasingly relies on AI to augment human decision-making, a collapse in AI reliability would be a profound crisis.

However, this future is not set in stone. Unlike cows in a field, we humans can recognize the danger and intervene. The future of AI knowledge depends on choices we make now. By ensuring our AI systems continue to learn from genuine, diverse, and factual information (and not just regurgitated AI “slop” (Model collapse – Wikipedia)), we keep their minds robust. We must be vigilant stewards of AI’s training diet – curating data, establishing standards, and reinforcing the value of human creativity.

The story of cannibalism teaches us that a system consuming itself cannot thrive. Just as societies learned to outlaw or strictly regulate cannibalistic practices for the good of all, we must instill practices that prevent our AI from gorging on its own synthetic regurgitations. The concept of “digital cannibalism” is more than a metaphor; it’s a real technical concern with high stakes. Will we heed the warning? The hope is that with awareness and action, we can ensure our AI remains healthy, knowledgeable, and innovative – not a mad machine eating its own tail. The tools and strategies are at our disposal. Now it’s up to us, the creators and consumers of content, to prevent AI from falling into the cannibalistic trap and guide it toward a future of factual, creative, and truly intelligent output. The brains of our AI (and by extension, the collective digital knowledge of humanity) are worth saving from such a fate.
